The essence of the simulation method. Simulation of economic processes: characteristics and main types

Read also

FEDERAL FISHERIES AGENCY

MINISTRY OF AGRICULTURE

KAMCHATKA STATE TECHNICAL UNIVERSITY

DEPARTMENT OF INFORMATION SYSTEMS

Topic: "IMITATION MODELING OF ECONOMIC

ACTIVITIES OF THE ENTERPRISE "

Course work

Manager: position

Bilchinskaya S.G. "__" ________ 2006

Developer: student gr.

Zhiteneva D.S. 04 Pi1 "__" ________ 2006

The work is protected "___" __________ 2006. with an estimate of ______

Petropavlovsk-Kamchatsky, 2006

Introduction ................................................. .................................................. ......................... 3

1. Theoretical foundations of simulation modeling .......................................... 4

1.1. Modeling. Simulation .......................................... 4

1.2. Monte Carlo method .............................................. ............................................ nine

1.3. Using the laws of distribution of random variables ....................... 12

1.3.1. Uniform distribution ................................................ ................ 12

1.3.2. Discrete distribution (general case) ....................................... 13

1.3.3. Normal distribution................................................ .................. fourteen

1.3.4. Exponential distribution ................................................ ...... 15

1.3.5. Generalized Erlang Distribution ............................................... .. 16

1.3.6. Triangular distribution ................................................ ................. 17

1.4. Planning a simulation computer experiment ................... 18

1.4.1. Cybernetic approach to the organization of experimental research of complex objects and processes ........................................ .................................................. ............. eighteen

1.4.2. Regression analysis and model experiment control. 19

1.4.3. Orthogonal planning of the second order ................................ 20

2. Practical work .............................................. .................................................. ..... 22

3. Conclusions on the Business Model "Production Efficiency" ................................... 26

Conclusion................................................. .................................................. ..................... 31

Bibliography............................................... ................................... 32

APPENDIX A ................................................ .................................................. .......... 33

APPENDIX B ................................................ .................................................. ........... 34

APPENDIX B ................................................ .................................................. ........... 35

APPENDIX D ................................................ .................................................. ........... 36

APPENDIX E ................................................ .................................................. ........... 37

APPENDIX E ................................................ .................................................. ........... 38

INTRODUCTION

Modeling in economics began to be applied long before economics finally took shape as an independent scientific discipline. Mathematical models were used by F. Quesnay (1758 Economic Table), A. Smith (classical macroeconomic model), D. Ricardo (model of international trade). In the 19th century, the mathematical school made a great contribution to modeling (L. Walras, O. Cournot, V Pareto, F. Edgeworth, etc.). In the XX century, the methods of mathematical modeling of the economy were used very widely and their use is associated with the outstanding works of the Nobel Prize laureates in economics (D. Hicks, R. Solow, V. Leontiev, P. Samuelson).

Course work on the subject "Simulation of economic processes" is an independent educational and research work.

The purpose of writing this course work is to consolidate theoretical and practical knowledge. Coverage of approaches and methods of applying simulation modeling in project economic activities.

The main task is to investigate, using simulation modeling, the effectiveness of the economic activity of the enterprise.

1. THEORETICAL BASIS OF SIMULATION

1.1. Modeling. Simulation modeling

In the process of managing various processes, the need to predict the results in certain conditions constantly arises. To speed up the decision to choose the optimal control option and save money for the experiment, process modeling is used.

Modeling is the transfer of the properties of one system, which is called the object of modeling, to another system, which is called the model of the object, the impact on the model is carried out in order to determine the properties of the object by the nature of its behavior.

Such a replacement (transfer) of the properties of an object has to be done in cases where its direct study is difficult or even impossible. As the practice of modeling shows, replacing an object with its model often gives a positive effect.

A model is a representation of an object, system or concept (idea) in some form, different from the form of their real existence. A model of an object can be either an exact copy of this object (albeit made from a different material and on a different scale), or display some of the characteristic properties of an object in an abstract form.

Simultaneously, in the process of modeling, it is possible to obtain reliable information about the object with less time, finances, funds and other resources.

The main goals of modeling are:

1) analysis and determination of properties of objects according to the model;

2) designing new systems and solving optimization problems on the model (finding the best option);

3) management of complex objects and processes;

4) predicting the behavior of an object in the future.

The following types of modeling are most common:

1) mathematical;

2) physical;

3) imitation.

In mathematical modeling, the object under study is replaced by the corresponding mathematical relations, formulas, expressions, with the help of which certain analytical problems are solved (analysis is done), optimal solutions are found, and forecasts are also made.

Physical models are real systems of the same nature as the investigated object, or a different one. The most typical option for physical modeling is the use of mock-ups, installations, or the selection of fragments of an object for conducting limited experiments. And most widely it found application in the natural sciences, sometimes in economics.

For complex systems, which include economic, social, information and other socio-information systems, simulation modeling has found wide application. This is a widespread type of analog modeling, implemented using a set of mathematical tools for special simulating computer programs and programming technologies, which allow, through analogous processes, to conduct a targeted study of the structure and functions of a real complex process in the computer memory in the "simulation" mode, to optimize some of its parameters.

To obtain the necessary information or results, it is necessary to “run” the simulation models, not “solve” them. Simulation models are not able to form their own solution in the form in which it takes place in analytical models, but can only serve as a means for analyzing the behavior of the system under conditions determined by the experimenter.

Therefore, simulation is not a theory, but a problem solving methodology. Moreover, simulation is only one of several critical problem-solving techniques available to the systems analyst. Since it is necessary to adapt the tool or method to the solution of the problem, and not vice versa, a natural question arises: in what cases is simulation modeling useful?

The need to solve problems through experimentation becomes obvious when there is a need to obtain specific information about the system that cannot be found in known sources. Experimenting directly on a real system removes a lot of the hassle if it is necessary to ensure consistency between the model and real conditions; however, the disadvantages of this experimentation are sometimes quite significant:

1) may violate the established procedure for the work of the company;

2) if people are an integral part of the system, then the results of experiments can be influenced by the so-called hathorn effect, which manifests itself in the fact that people, feeling that they are being watched, can change their behavior;

3) it can be difficult to maintain the same operating conditions with each repetition of the experiment or throughout the entire duration of a series of experiments;

4) obtaining the same sample size (and, therefore, the statistical significance of the experimental results) may require an excessive investment of time and money;

5) when experimenting with real systems, it may be impossible to explore many alternatives.

For these reasons, the investigator should consider the feasibility of applying simulation when any of the following conditions exist:

1. There is no complete mathematical formulation of this problem, or analytical methods for solving the formulated mathematical model have not yet been developed. Many queuing models related to the consideration of queues fall into this category.

2. Analytical methods are available, but the mathematical procedures are so complex and time consuming that simulation provides an easier way to solve the problem.

3. Analytical solutions exist, but their implementation is impossible due to insufficient mathematical training of the existing staff. In this case, the costs of designing, testing and running on the simulation model should be weighed against the costs associated with outsourcing.

4. In addition to evaluating certain parameters, it is advisable to observe the process during a certain period on a simulation model.

5. Simulation modeling may turn out to be the only possibility due to the difficulties of setting up experiments and observing phenomena in real conditions (for example, studying the behavior of spaceships under conditions of interplanetary flights).

6. For long-term operation of systems or processes, it may be necessary to compress the timeline. Simulation provides the ability to fully control the time of the process under study, since the phenomenon can be slowed down or accelerated at will (for example, research on the problems of urban decline).

An additional benefit simulation modeling can be considered the broadest possibilities of its application in the field of education and training. The development and use of a simulation model allows the experimenter to see and test real processes and situations on the model. This, in turn, should greatly help to understand and feel the problem, which stimulates the search for innovations.

Simulation modeling is implemented by means of a set of mathematical tools, special computer programs and techniques that allow using a computer to carry out targeted modeling in the mode of "imitation" of the structure and functions of a complex process and optimization of some of its parameters. A set of software tools and modeling techniques determines the specifics of the modeling system - special software.

Simulation of economic processes is usually used in two cases:

1. to manage a complex business process, when the simulation model of a controlled economic object is used as a tool in the loop of an adaptive control system created on the basis of information technologies;

2. when conducting experiments with discrete-continuous models of complex economic objects to obtain and "observe" their dynamics in emergency situations associated with risks, the full-scale modeling of which is undesirable or impossible.

Simulation modeling as a special information technology consists of the following main stages:

1. Structural analysis of processes... At this stage, the structure of a complex real process is analyzed and it is decomposed into simpler interconnected subprocesses, each of which performs a specific function. The identified sub-processes can be subdivided into other simpler sub-processes. Thus, the structure of the process being modeled can be represented as a graph with a hierarchical structure.

Structural analysis is especially effective in modeling economic processes, where many of the constituent sub-processes occur visually and do not have a physical essence.

2. Formalized model description... The resulting graphic image of the simulation model, the functions performed by each subprocess, the conditions for the interaction of all subprocesses must be described in a special language for subsequent translation.

This can be done in various ways: it can be described manually in a specific language or with the help of a computer graphic designer.

3. Building the model... This stage includes translation and editing of links, as well as verification of parameters.

4. Carrying out an extreme experiment... At this stage, the user can get information about how close the created model is to a real-life phenomenon, and how suitable this model is for studying new, not yet tested values of arguments and system parameters.

1.2. Monte Carlo method

Statistical Monte Carlo testing is the simplest simulation in the absence of any rules of conduct. Obtaining samples using the Monte Carlo method is the main principle of computer modeling of systems containing stochastic or probabilistic elements. The origin of the method is associated with the work of von Neumann and Ulan in the late 1940s, when they introduced the name “Monte Carlo” for it and applied it to solving some problems of shielding nuclear radiation. This mathematical method was known earlier, but found its rebirth in Los Alamos in closed works on nuclear technology, which were carried out under the code designation "Monte Carlo". The application of the method turned out to be so successful that it became widespread in other areas, in particular in economics.

Therefore, many specialists sometimes consider the term "Monte Carlo method" synonymous with the term "simulation modeling", which is generally incorrect. Simulation modeling is a broader concept, and the Monte Carlo method is an important, but far from the only methodological component of simulation modeling.

According to the Monte Carlo method, the designer can simulate the operation of thousands of complex systems that control thousands of types of similar processes, and investigate the behavior of the entire group, processing statistical data. Another way of using this method is to simulate the behavior of the control system over a very long period of model time (several years), and the astronomical time of the simulation program execution on a computer can be fractions of a second.

In a Monte Carlo analysis, the computer uses a pseudo-random number generation procedure to simulate data from the population of interest. The Monte Carlo analysis procedure builds samples from the population as instructed by the user, and then does the following: simulates a random sample from the population, analyzes the sample, and saves the results. After a large number of repetitions, the stored results mimic the actual distribution of the sample statistic well.

In various problems encountered in the creation of complex systems, quantities whose values are determined randomly can be used. Examples of such quantities are:

1 random moments in time at which orders are received for the firm;

3 external influences (requirements or changes in laws, payments of fines, etc.);

4 payment of bank loans;

5 receipt of funds from customers;

6 measurement errors.

A number, a collection of numbers, a vector, or a function can be used as their corresponding variables. One of the varieties of the Monte Carlo method for the numerical solution of problems involving random variables is the statistical test method, which consists in simulating random events.

The Monte Carlo method is based on statistical tests and is extremal in nature; it can be used to solve fully deterministic problems, such as matrix inversion, solving partial differential equations, finding extrema, and numerical integration. In Monte Carlo calculations, statistical results are obtained by repeated tests. The probability that these results differ from the true ones by no more than a given value is a function of the number of trials.

Monte Carlo calculations are based on a random selection of numbers from a given probability distribution. In practical calculations, these numbers are taken from tables or obtained by some operations, the results of which are pseudo-random numbers with the same properties as numbers obtained by random sampling. There are a large number of computational algorithms that allow you to obtain long sequences of pseudo-random numbers.

One of the simplest and most efficient computational methods for obtaining a sequence of uniformly distributed random numbers r i, using, for example, a calculator or any other device operating in the decimal system, includes only one multiplication operation.

The method is as follows: if r i = 0.0040353607, then r i + 1 = (40353607ri) mod 1, where mod 1 means the operation of extracting only the fractional part after the decimal point from the result. As described in various literature sources, the numbers r i start repeating after a cycle of 50 million numbers, so r 5oooooo1 = r 1. The sequence r 1 is obtained uniformly distributed over the interval (0, 1).

The use of the Monte Carlo method can give a significant effect in modeling the development of processes, the natural observation of which is undesirable or impossible, and other mathematical methods for these processes are either not developed or unacceptable due to numerous reservations and assumptions that can lead to serious errors or wrong conclusions. In this regard, it is necessary not only to observe the development of the process in undesirable directions, but also to evaluate hypotheses about the parameters of undesirable situations that such a development will lead to, including the parameters of risks.

1.3. Using the laws of distribution of random variables

For a qualitative assessment of a complex system, it is convenient to use the results of the theory of random processes. The experience of observing objects shows that they function under the influence of a large number of random factors. Therefore, predicting the behavior of a complex system can make sense only within the framework of probabilistic categories. In other words, for expected events, only the probabilities of their occurrence can be indicated, and for some values one has to restrict oneself to the laws of their distribution or other probabilistic characteristics (for example, mean values, variances, etc.).

To study the process of functioning of each specific complex system, taking into account random factors, it is necessary to have a fairly clear idea of the sources of random influences and very reliable data on their quantitative characteristics. Therefore, any calculation or theoretical analysis associated with the study of a complex system is preceded by the experimental accumulation of statistical material characterizing the behavior of individual elements and the system as a whole in real conditions. Processing this material allows you to obtain the initial data for the calculation and analysis.

The distribution law of a random variable is a ratio that allows you to determine the probability of the appearance of a random variable in any interval. It can be set tabularly, analytically (in the form of a formula) and graphically.

There are several laws of distribution of random variables.

1.3.1. Even distribution

This type of distribution is used to obtain more complex distributions, both discrete and continuous. Such distributions are obtained using two basic techniques:

a) inverse functions;

b) combinations of quantities distributed according to other laws.

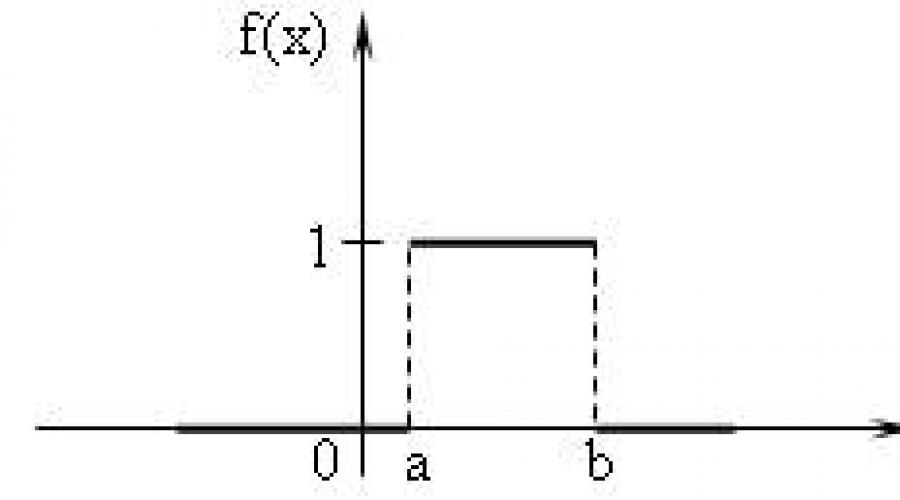

Uniform law is a symmetric distribution law of random variables (rectangle). The density of uniform distribution is given by the formula:

i.e., on the interval to which all possible values of the random variable belong, the density remains constant (Fig. 1).

Fig. 1 Probability density function and characteristics of uniform distribution

In simulation models of economic processes, uniform distribution is sometimes used to simulate simple (one-stage) work, when calculating according to network schedules of work, in military affairs - to simulate the timing of travel by subdivisions, the time of digging trenches and the construction of fortifications.

A uniform distribution is used if the only thing known about time intervals is that they have the maximum spread, and nothing is known about the probability distributions of these intervals.

1.3.2. Discrete distribution

Discrete distribution is represented by two laws:

1) binomial, where the probability of an event occurring in several independent tests is determined by the Bernoulli formula:

n - number of independent tests

m is the number of occurrences of an event in n tests.

2) the Poisson distribution, where, with a large number of tests, the probability of an event occurring is very small and is determined by the formula:

k is the number of occurrences of an event in several independent trials

Average number of occurrences of an event across multiple independent trials.

1.3.3. Normal distribution

The normal, or Gaussian, distribution is undoubtedly one of the most important and commonly used types of continuous distributions. It is symmetrical about the mathematical expectation.

Continuous random variable t has a normal probability distribution with parameters T and > Oh, if its probability density has the form (Fig. 2, Fig. 3):

where T- expected value M [t];

Fig. 2, Fig. 3 Probability density function and characteristics of the normal distribution

Any complex work on the objects of the economy consists of many short, sequential elementary components of work. Therefore, when estimating labor costs, it is always true that their duration is a random variable distributed according to the normal law.

In simulation models of economic processes, the law of normal distribution is used to model complex multi-stage work.

1.3.4. Exponential distribution

It also occupies a very important place in the systematic analysis of economic activity. Many phenomena obey this distribution law, for example:

1 time of receipt of the order for the enterprise;

2 customer visits to a supermarket;

3 telephone conversations;

4 the service life of parts and assemblies in a computer installed, for example, in accounting.

The exponential distribution function looks like this:

F (x) = at 0 Exponential distribution parameter,> 0. Exponential distributions are special cases of the gamma distribution. Rice. 5 Probability density function of gamma distribution In simulation models of economic processes, exponential distribution is used to model the intervals of incoming orders from multiple customers to a firm. In reliability theory, it is used to simulate the time interval between two successive faults. In communications and computer science - for modeling information flows. 1.3.5. Generalized Erlang Distribution P (t) = for t≥0; where K-elementary sequential components, distributed according to the exponential law. The generalized Erlang distribution is used to create both mathematical and simulation models. It is convenient to use this distribution instead of the normal distribution if the model is reduced to a purely mathematical problem. In addition, in real life there is an objective probability of the appearance of groups of applications as a reaction to some actions, therefore, group flows arise. The use of purely mathematical methods for studying the effects of such group flows in models is either impossible due to the lack of a way to obtain an analytical expression, or it is difficult, since analytical expressions contain a large systematic error due to numerous assumptions due to which the researcher was able to obtain these expressions. The generalized Erlang distribution can be used to describe one of the types of group flow. The emergence of group flows in complex economic systems leads to a sharp increase in the average duration of various delays (orders in queues, payment delays, etc.), as well as to an increase in the likelihood of risk events or insured events. 1.3.6. Triangular distribution Triangular distribution is more informative than uniform distribution. For this distribution, three quantities are determined - minimum, maximum and mode. The density function graph consists of two line segments, one of which increases with a change X from the minimum value to the mode, and the other decreases when changing X from mode value to maximum. The value of the mathematical expectation of a triangular distribution is equal to one third of the sum of the minimum, mode and maximum. The triangular distribution is used when the most probable value on a certain interval is known and the piecewise linear nature of the density function is assumed. Fig.5 Probability density function and characteristics of triangular distribution. The triangular distribution is easy to apply and interpret, but there must be a good reason for choosing it. In simulation models of economic processes, such a distribution is sometimes used to model the time of access to databases. 1.4. Planning a simulation computer experiment The simulation model, regardless of the chosen modeling system (for example, Pilgrim or GPSS), allows one to obtain the first two points and information about the distribution law of any quantity of interest to the experimenter (an experimenter is a subject who needs qualitative and quantitative conclusions about the characteristics of the process under study). 1.4.1. Cybernetic approach to the organization of experimental research of complex objects and processes. Experiment planning can be viewed as a cybernetic approach to organizing and conducting experimental research on complex objects and processes. The main idea of the method is the possibility of optimal control of an experiment under conditions of uncertainty, which is akin to the premises on which cybernetics is based. The purpose of most research works is to determine the optimal parameters of a complex system or the optimal conditions for the process: 1.determining the parameters of an investment project in conditions of uncertainty and risk; 2. the choice of structural and electrical parameters of the physical installation, providing the most advantageous mode of its operation; 3. obtaining the maximum possible reaction yield by varying the temperature, pressure and the ratio of reagents - in the tasks of chemistry; 4. selection of alloying components to obtain an alloy with the maximum value of any characteristic (toughness, tensile strength, etc.) - in metallurgy. When solving problems of this kind, it is necessary to take into account the influence of a large number of factors, some of which are not amenable to regulation and control, which extremely complicates a complete theoretical study of the problem. Therefore, they follow the path of establishing the basic laws through a series of experiments. The researcher was able to express the results of the experiment in a form convenient for their analysis and use through simple calculations. 1.4.2. Regression analysis and model experiment control Fig. 7 Example of averaging the experimental results Scatter of values η v in this case is determined not only by measurement errors, but mainly by the influence of interference z j... The complexity of the optimal control problem is characterized not only by the complexity of the dependence itself η v (v = 1, 2,…, n) but also the influence z j, which introduces an element of randomness into the experiment. Dependency graph η v (x i) determines the correlation between the quantities η v and x i, which can be obtained from the results of the experiment using the methods of mathematical statistics. Calculation of such dependencies for a large number of input parameters x i and significant influence of interference z j and is the main task of the researcher-experimenter. Moreover, the more difficult the task, the more effective the application of experimental planning methods becomes. There are two types of experiment: Passive; Active. At passive experiment the researcher only monitors the process (changes in its input and output parameters). Based on the results of the observations, a conclusion is made about the influence of the input parameters on the output. A passive experiment is usually performed on the basis of an ongoing economic or production process that does not allow active intervention by the experimenter. This method is not expensive, but it takes a lot of time. Active experiment is carried out mainly in laboratory conditions, where the experimenter has the ability to change the input characteristics according to a predetermined plan. Such an experiment leads to the goal faster. The corresponding approximation methods are called regression analysis. Regression analysis is a methodological toolkit for solving problems of forecasting, planning and analysis of economic activities of enterprises. The tasks of regression analysis are to establish the form of dependence between variables, to evaluate the regression function and to establish the influence of factors on the dependent variable, to estimate unknown values (forecast values) of the dependent variable. 1.4.3. Orthogonal planning of the second order. Orthogonal planning of the experiment (in comparison with non-orthogonal) reduces the number of experiments and greatly simplifies the calculations when obtaining the regression equation. However, such planning is feasible only if it is possible to conduct an active experiment. A practical tool for finding an extremum is a factorial experiment. The main advantages of the factorial experiment are simplicity and the possibility of finding an extreme point (with some error) if the unknown surface is sufficiently smooth and there are no local extrema. Two main disadvantages of the factorial experiment should be noted. The first one is that it is impossible to search for an extremum in the presence of stepwise discontinuities of an unknown surface and local extrema. The second - in the absence of means for describing the nature of the surface near the extreme point due to the use of the simplest linear regression equations, which affects the inertia of the control system, since in the control process it is necessary to carry out factorial experiments to select control actions. For control purposes, second-order orthogonal scheduling is most appropriate. An experiment usually consists of two stages. First, using a factorial experiment, an area is found where an extreme point exists. Then, in the region of existence of an extreme point, an experiment is carried out to obtain a regression equation of the 2nd order. The second-order regression equation allows you to immediately determine the control actions, without additional experiments or experiments. Additional experiment will be required only in cases where the response surface changes significantly under the influence of uncontrollable external factors (for example, a significant change in tax policy in the country will seriously affect the response surface reflecting the production costs of the enterprise 2. PRACTICAL WORK. In this section, we look at how the above theoretical knowledge can be applied to specific economic situations. The main objective of our coursework is to determine the effectiveness of an enterprise engaged in commercial activities For the implementation of the project, we have chosen the Pilgrim package. The Pilgrim package has a wide range of capabilities for simulating the temporal, spatial and financial dynamics of simulated objects. It can be used to create discrete-continuous models. The developed models have the property of collective management of the modeling process. Any blocks can be inserted into the model text using the standard C ++ language. The Pilgrim package is portable, i.e. porting to any other platform with a C ++ compiler. Models in the Pilgrim system are compiled and therefore have high performance, which is very important for working out management decisions and adaptive selection of options in a super-accelerated time scale. The object code obtained after compilation can be embedded into the developed software systems or transferred (sold) to the customer, since the tools of the Pilgrim package are not used when operating the models. The fifth version of Pilgrim is a software product created in 2000 on an object-oriented basis and taking into account the main positive properties of previous versions. The advantages of this system: Focus on joint modeling of material, informational and "monetary" processes; The presence of a developed CASE-shell, which allows constructing multilevel models in the mode of structural system analysis; Availability of interfaces with databases; The ability for the end user of the models to directly analyze the results thanks to the formalized technology for creating functional windows for observing the model using Visual C ++, Delphi or other means; The ability to control models directly in the process of their execution using special dialog boxes. Thus, the Pilgrim package is a good tool for creating both discrete and continuous models, has many advantages and greatly simplifies model creation. The object of observation is an enterprise that is engaged in the sale of the manufactured goods. For statistical analysis of the data on the functioning of the enterprise and comparison of the results obtained, all factors influencing the process of production and sale of goods were compared. The enterprise is engaged in the release of goods in small batches (the size of these batches is known). There is a market where these products are sold. The batch size of the purchased goods is generally a random variable. The structural diagram of the business process contains three layers. On two layers there are autonomous processes "Production" (Appendix A) and "Sales" (Appendix B), the schemes of which are independent from each other, since there are no ways to transfer transactions. The indirect interaction of these processes is carried out only through resources: material resources (in the form of finished products) and monetary resources (mainly through the current account). The management of cash resources takes place on a separate layer - in the process of "Cash transactions" (Appendix B). Let us introduce an objective function: the delay time of payments from the settlement account of Trs. Main control parameters: 1 unit price; 2 volume of the produced batch; 3 the amount of the loan requested from the bank. Fixing all other parameters: 4 batch release time; 5 the number of production lines; 6 interval of receipt of orders from buyers; 7 the range of sizes of the sold lot; 8 the cost of components and materials for the release of the batch; 9 starting capital on the current account; it is possible to minimize Trc for a specific market situation. The minimum Trc is achieved at one of the maximums of the average amount of money in the current account. Moreover, the probability of a risk event - non-payment of loan debts - is close to a minimum (this can be proved during a statistical experiment with the model). The first process " Production»(Appendix A) implements the basic elementary processes. Node 1 simulates the receipt of orders for the manufacture of batches of products from the company's management. Node 2 - an attempt to get a loan. An auxiliary transaction appears in this node - a request to the bank. Node 3 - waiting for a loan with this request. Node 4 is the administration of the bank: if the previous loan is returned, then a new one is provided (otherwise, the request is waiting in the queue). Node 5 transfers the loan to the company's current account. In node 6, the auxiliary request is destroyed, but the information that the loan has been provided is a “barrier” on the path of the next request for another loan (hold operation). The main transaction order passes through node 2 without delay. In node 7, payment for components is made if there is a sufficient amount on the current account (even if the loan has not been received). Otherwise, there is an expectation of either a loan or payment for the products sold. At node 8, a transaction is queued if all production lines are busy. Node 9 is manufacturing a batch of products. At node 10, an additional request for a loan repayment appears if the loan was previously allocated. This application goes to node 11, where money is transferred from the company's current account to the bank; if there is no money, then the application is awaiting. After the loan is repaid, this application is destroyed (in node 12); the bank has information that the loan has been repaid, and the company can issue the next loan (rels operation). The transaction order passes through node 10 without delay, and at node 13 it is destroyed. Further, it is considered that the batch is made and arrived at the finished product warehouse. The second process " Sales»(Appendix B) simulates the main functions for the sale of products. Node 14 is a generator of product buyer transactions. These transactions go to the warehouse (node 15), and if there is the requested quantity of goods, then the goods are released to the buyer; otherwise, buyer waits. Node 16 simulates goods issue and queue control. After receiving the goods, the buyer transfers the money to the company's current account (node 17). At node 18, the customer is considered served; the corresponding transaction is no longer needed and is destroyed. The third process “ Cash transactions»(Appendix B) simulates transactions in accounting. Posting requests come from the first layer from nodes 5, 7, 11 (process "Production") and from node 17 (process "Sales"). The dotted lines show the movement of funds on Account 51 ("Settlement account", node 20), account 60 ("Suppliers, contractors", node 22), account 62 ("Buyers, customers", node 21) and on account 90 (" Bank ", node 19). The conventional numbers roughly correspond to the chart of accounts of accounting. Node 23 mimics the work of a CFO. The processed transactions after accounting entries go back to the nodes where they came from; the numbers of these nodes are in the t → updown transaction parameter. The source code of the model is presented in Appendix D. This source code builds the model itself, i.e. creates all the nodes (represented in the structural diagram of the business process) and the links between them. The code can be generated by the Pilgrim (Gem) constructor, in which the processes are built in an object form (Appendix E). The model is created using Microsoft Developer Studio. Microsoft Developer Studio is a C ++ based application development package. After attaching additional libraries (Pilgrim.lib, comctl32.lib) and resource files (Pilgrim.res) to the project, we compile this model. After compilation, we get a ready-made model. A report file is automatically created, which stores the simulation results obtained after one run of the model. The report file is presented in Appendix D. 3. CONCLUSIONS ON THE BUSINESS MODEL "PRODUCTION EFFICIENCY" 1) Node number; 2) Node name; 3) Node type; 5) M (t) average waiting time; 6) Counter of inputs; 7) Remaining transactions; 8) The state of the node at this moment. The model consists of three independent processes: the main production process (Appendix A), the product sales process (Appendix B) and the cash flow management process (Appendix C). The main production process.

During the period of modeling the business process in node 1 ("Orders"), 10 applications for the manufacture of products were generated. The average order formation time is 74 days, as a result, one transaction was not included in the time frame of the modeling process. The remaining 9 transactions entered node 2 (“Fork1”), where the corresponding number of requests to the bank for a loan was created. The average waiting time is 19 days, which is the simulation time in which all transactions were satisfied. Further, it can be seen that 8 requests received a positive response in node 3 ("Issuance authorization"). The average time for obtaining a permit is 65 days. The load of this node averaged 70.4%. The state of the node at the end of the simulation time is closed, this is due to the fact that this node provides a new loan only if the previous one is returned, therefore, the loan at the end of the simulation has not been repaid (this can be seen from node 11). Node 5 transfers the loan to the company's current account. And, as can be seen from the table of results, the bank transferred 135,000 rubles to the company's account. At node 6, all 11 credit requests were destroyed. In node 7 ("Payments to suppliers"), payment for components was made in the amount of the entire loan received earlier (135,000 rubles). At node 8, we see that 9 transactions are queued. This happens when all production lines are busy. In node 9 ("Order fulfillment"), the direct production of products is carried out. It takes 74 days to make one batch of products. During the simulation period, 9 orders were completed. The load of this node was 40%. In node 13, orders for the manufacture of products were destroyed in the amount of 8 pieces. with the expectation that the batches are made and arrived at the warehouse. The average production time is 78 days. At node 10 (“Fork 2”), 0 additional applications for loan repayment were created. These applications were received at node 11 ("Return"), where a loan of 120,000 rubles was returned to the bank. After the loan was repaid, 7 applications for refund were destroyed in node 12. with an average time of 37 days. Product sales process.

Node 14 (Clients) generated 26 transactions-buyers of products with an average time of 28 days. One transaction is waiting in the queue. Then 25 transactions-buyers "turned" to the warehouse (node 15) for the goods. The warehouse utilization during the simulation period was 4.7%. Products from the warehouse were issued immediately - without delay. As a result of the issuance of products to customers, 1077 units remained in the warehouse. products, the receipt of the goods is not expected in the queue, therefore, upon receipt of the order, the enterprise can issue the required quantity of goods directly from the warehouse. Node 16 simulates the delivery of products to 25 customers (1 transaction in the queue). After receiving the goods, the customers paid for the received goods in the amount of 119160 rubles without delay. At node 18, all served transactions were destroyed. Cash flow management process.

In this process, we are dealing with the following accounting entries (requests for which come from nodes 5, 7, 11 and 17, respectively): 1 loan issued by the bank - 135,000 rubles; 2 payment to suppliers for components - 135,000 rubles; 3 bank loan repayment - 120,000 rubles; 4 funds from the sale of products - 119160 rubles were received on the current account. As a result of these postings, we received the following data on the distribution of funds across accounts: 1) Count. 90: Bank. 9 transactions were processed, one is waiting in the queue. The balance of funds is 9,970,000 rubles. Required - 0 rubles. 2) Count. 51: R / account. 17 transactions are served, one is waiting in the queue. The balance of funds is 14260 rubles. Required - 15,000 rubles. Consequently, when the simulation time is extended, the transaction in the queue cannot be serviced immediately due to the lack of funds on the company's account. 3) Count. 61: Customers. 25 transactions served. The balance of funds is 9,880,840 rubles. Required - 0 rubles. 4) Count. 60: Suppliers. 0 transactions serviced (the “Delivery of goods” process was not considered in this experiment). The balance of funds is 135,000 rubles. Required - 0 rubles. Node 23 mimics the work of a CFO. They served 50 transactions Analysis of the "Delay dynamics" graph.

As a result of running the model, in addition to the file containing tabular information, we get a graph of the dynamics of delays in the queue (Fig. 9). The graph of the dynamics of delays in the queue in the node "Calc. score 51 ”indicates that the delay increases over time. Delay of payments from the company's current account ≈ 18 days. This is a fairly high figure. As a result, the company makes payments less and less, and soon it is possible that the delay time exceeds the waiting time of the lender - this can lead to bankruptcy of the company. But, fortunately, these delays are not frequent, and therefore, this is a plus for this model. This situation can be resolved by minimizing the delay in payments for a specific market situation. The minimum delay time will be reached at one of the maximums of the average amount of money in the current account. In this case, the probability of non-payment of debts on loans will be close to a minimum. Evaluating the effectiveness of the model.

Based on the description of the processes, we can conclude that the processes of production and sales of products in general work effectively. The main problem of the model is the cash flow management process. The main problem of this process is debts to repay a bank loan, thereby causing a shortage of funds in the current account, which will not allow free manipulation of the funds received, because they need to be directed to repay the loan. As we learned from the analysis of the "Delay dynamics" graph, in the future the company will be able to repay accounts payable on time, but not always in clearly indicated lines Therefore, we can conclude that at the moment the model is quite effective, but requires the smallest improvements. The generalization of the results of statistical processing of information was carried out by analyzing the results of the experiment. The graph of delays in the "Settlement account" node shows that, throughout the entire simulation period, the delays in the node are mostly at the same level, although delays appear occasionally. It follows that the increase in the likelihood of a situation where an enterprise may be on the verge of bankruptcy is extremely low. Therefore, the model is quite acceptable, but, as mentioned above, it requires minor improvements. CONCLUSION Systems that are complex in terms of internal connections and large in terms of the number of elements are economically difficult to direct methods of modeling and often, for construction and study, they switch to simulation methods. The emergence of the latest information technologies increases not only the capabilities of modeling systems, but also allows the use of a wider variety of models and methods of their implementation. The improvement of computing and telecommunications technology has led to the development of computer modeling methods, without which it is impossible to study processes and phenomena, as well as the construction of large and complex systems. Based on the work done, we can say that the importance of modeling in the economy is very great. Therefore, a modern economist should be well versed in economic and mathematical methods, be able to practically apply them to simulate real economic situations. This makes it possible to better assimilate the theoretical issues of the modern economy, contributes to the improvement of the level of qualifications and the general professional culture of a specialist. With the help of various business models, it is possible to describe economic objects, patterns, connections and processes not only at the level of an individual firm, but also at the state level. And this is a very important fact for any country: it is possible to predict ups and downs, crises and stagnation in the economy. BIBLIOGRAPHY 1. Emelyanov A.A., Vlasova E.A. Computer modeling - M .: Moscow state. University of Economics, Statistics and Informatics, 2002. 2. Locks OO, Tolstopyatenko AV, Cheremnykh Yu.N. Mathematical Methods in Economics, M., Business and Service, 2001. 3. Kolemaev VA, Mathematical Economics, M., UNITI, 1998. 4. Naylor T. Machine simulation experiments with models of economic systems. - M .: Mir, 1975 .-- 392 p. 5. Councils B.Ya., Yakovlev S.A. System modeling. - M .: Higher. Shk., 2001. 6. Shannon R.E. Simulation of systems: science and art. - M .: Mir, 1978. 7.www.thrusta.narod.ru APPENDIX A Business model diagram "Enterprise efficiency" APPENDIX B The process of selling products of the business model "Enterprise Efficiency" APPENDIX B The cash flow management process of the Enterprise Efficiency business model APPENDIX D Model source code APPENDIX E Model Report File APPENDIX E Students, graduate students, young scientists who use the knowledge base in their studies and work will be very grateful to you. University of International Business. On the topic: Simulation modeling in economics Completed by student gr. Economy Tazhibaev Ermek Almaty 2009 Plan Introduction 1. Definition of the concept of "simulation" 2. Simulation of reproduction processes in the oil and gas industry 3. Monte Carlo method as a kind of simulation 4. Example. Assessment of geological reserves Conclusion Introduction Both analytical and statistical models are widely used in operations research. Each of these types has advantages and disadvantages. Analytical models are coarser, take into account fewer factors, and always require some kind of assumptions and simplifications. On the other hand, the calculation results for them are easier to see, more clearly reflect the main regularities inherent in the phenomenon. And, most importantly, analytical models are more suited to finding optimal solutions. Statistical models, in comparison with analytical ones, are more accurate and detailed, do not require such rough assumptions, allow taking into account a large (in theory - an infinitely large) number of factors. But they also have their drawbacks: cumbersomeness, poor visibility, high consumption of computer time, and most importantly, the extreme difficulty of finding optimal solutions that have to be looked for "by touch" by guesswork and trial. The best work in operations research is based on the combined use of analytical and statistical models. The analytical model makes it possible to understand the phenomenon in general terms, to outline, as it were, the outline of the basic laws. Any refinements can be obtained using statistical models. Simulation is applied to processes in which human will can interfere from time to time. The person in charge of the operation can, depending on the current situation, make certain decisions, just as a chess player, looking at the board, chooses his next move. Then a mathematical model is set in motion, which shows how the situation is expected to change in response to this decision and what consequences it will lead to after some time. The next "current decision" is made taking into account the real new situation, and so on. As a result of repeated repetition of such a procedure, the manager, as it were, "gains experience", learns from his own and others' mistakes and gradually learns to make the right decisions - if not optimal, then almost optimal. 1.

Definition of "simulation modeling" In modern literature, there is no single point of view on what is meant by imitation modeling. So there are different interpretations: In the first, a simulation model is understood as a mathematical model in the classical sense; In the second, this term is retained only for those models in which random influences are played out (imitated) in one way or another; In the third, it is assumed that the simulation model differs from the usual mathematical one in a more detailed description, but the criterion by which one can say when the mathematical model ends and the simulation begins is not introduced; Simulation is applied to processes in which human will can interfere from time to time. The person in charge of the operation can, depending on the current situation, make certain decisions, just like a chess player looking at the board chooses his next move. Then a mathematical model is set in motion, which shows how the situation is expected to change in response to this decision and what consequences it will lead to after some time. The next current decision is made taking into account the real new situation, etc. As a result of repeated repetition of this procedure, the manager seems to "gain experience", learns from his own and others' mistakes and gradually learn to make the right decisions - if not optimal, then almost optimal. Let's try to illustrate the simulation process by comparing it with a classical mathematical model. Stages of the process of building a mathematical model of a complex system: 1. The main questions about the behavior of the system are formulated, the answers to which we want to get with the help of the model. 2. From the set of laws governing the behavior of the system, those are selected whose influence is significant in the search for answers to the questions posed. 3. In addition to these laws, if necessary, for the system as a whole or its individual parts, certain hypotheses about the functioning are formulated. Practice serves as the criterion for the adequacy of the model. Difficulties in building a mathematical model of a complex system: If the model contains many links between elements, various nonlinear constraints, a large number of parameters, etc. Real systems are often subject to the influence of random various factors, the accounting of which analytically presents very great difficulties, often insurmountable with a large number of them; The possibility of comparing the model and the original with this approach is available only at the beginning. These difficulties determine the use of simulation modeling. It is implemented in the following stages: 1. As before, the main questions about the behavior of a complex system are formulated, the answers to which we want to receive. 2. The system is decomposed into simpler parts-blocks. 3. Laws and "plausible" hypotheses regarding the behavior of both the system as a whole and its individual parts are formulated. 4. Depending on the questions posed to the researcher, the so-called system time is introduced, which simulates the course of time in a real system. 5. The necessary phenomenological properties of the system and its individual parts are specified in a formalized way. 6. The random parameters appearing in the model are compared with some of their implementations, which remain constant for one or more system time cycles. Next, new implementations are found. 2.

Simulation of reproduction processes in the oil and gas industry The current stage of development of the oil and gas industry is characterized by the increasing complexity of relations and interaction of natural, economic, organizational, environmental and other factors of production both at the level of individual enterprises and oil and gas producing regions, and at the industry-wide level. In the oil and gas industry, production is distinguished by long periods, the separation of the production and technological process in time (prospecting and exploration, development and construction, oil, gas and condensate production), the presence of lag shifts and delays, the dynamism of the resources used and other factors, the values of many of which are probabilistic nature. The values of these factors change systematically due to the commissioning of new fields, as well as the lack of confirmation of the expected results for those in development. This forces the enterprises of the oil and gas industry to periodically revise plans for the reproduction of fixed assets and redistribute resources in order to optimize the results of production and economic activities. When drawing up plans, significant assistance to persons preparing a draft economic decision can be provided by the use of methods of mathematical modeling, including simulation. The essence of these methods lies in the repeated reproduction of variants of planning decisions with subsequent analysis and selection of the most rational of them according to the established system of criteria. Using a simulation model, you can create a single structural diagram that integrates functional controls (strategic, tactical and operational planning) for the main production processes of the industry (prospecting, exploration, development, production, transportation, oil and gas processing). 3.

Monte Carlo method as a varietysimulation The date of birth of the Monte Carlo method is considered to be 1949, when an article titled "The Monte Carlo method" appeared. American mathematicians J. Neumann and S. Ulam are considered the creators of this method. In the USSR, the first articles on the Monte Carlo method were published in 1955-1956. It is curious that the theoretical basis of the method has been known for a long time. Moreover, some statistical problems were sometimes calculated using random samples, that is, in fact, by the Monte Carlo method. However, before the advent of electronic computers (computers), this method could not find any widespread use, because modeling random variables "manually is a very laborious work. Thus, the emergence of the Monte Carlo method as a very universal numerical method became possible only thanks to the appearance COMPUTER. The very name “Monte Carlo” comes from the city of Monte Carlo in the Principality of Monaco, famous for its gambling house. The idea of the method is extremely simple and it consists in the following. Instead of describing the process with the help of an analytical apparatus (differential or algebraic equations), a "drawing" of a random phenomenon is performed using a specially organized procedure that includes randomness and gives a random result. In reality, the concrete realization of a random process develops differently each time; similarly, as a result of statistical modeling, each time we get a new, different from the others implementation of the process under study. What can she give us? Nothing in itself, just like, say, one case of a patient cured with the help of some kind of medicine. It is another matter if there are many such implementations. This set of realizations can be used as some kind of artificially obtained statistical material that can be processed by the usual methods of mathematical statistics. After such processing, any characteristics of interest to us can be obtained: probabilities of events, mathematical expectations and variances of random variables, etc. When simulating random phenomena by the Monte Carlo method, we use randomness itself as a research apparatus, make it work for us. This is often easier than trying to build an analytical model. For complex operations in which a large number of elements (machines, people, organizations, auxiliary means) are involved, in which random factors are complexly intertwined, where the process is clearly not Markovian, the statistical modeling method, as a rule, turns out to be simpler than analytical (and often the only possible). In essence, any probabilistic problem can be solved by the Monte Carlo method, but it becomes justified only when the drawing procedure is simpler, and not more complicated than analytical calculation. Let's give an example when the Monte Carlo method is possible, but extremely unreasonable. Suppose, for example, three independent shots are fired at some target, each of which hits the target with a probability of 1/2. It is required to find the probability of at least one hit. An elementary calculation gives us the probability of at least one hit equal to 1 - (1/2) 3 = 7/8. The same problem can be solved by "drawing", statistical modeling. Instead of “three shots” we will throw “three coins”, counting, say, the coat of arms for a hit, and tails for a “miss”. The experiment is considered "successful" if at least one of the coins has a coat of arms. Let's make a lot of experiments, calculate the total number of "successes" and divide by the number N of experiments performed. Thus, we get the frequency of the event, and it is close to the probability for a large number of experiments. Well, what then? Such a technique could only be applied by a person who does not know the theory of probability at all, nevertheless, in principle, it is possible. The Monte Carlo method is a numerical method for solving mathematical problems by simulating random variables. Let's consider a simple example illustrating the method. Example 1. Suppose we need to calculate the area of a flat figure S. It can be an arbitrary figure with a curved border, defined graphically or analytically, connected or consisting of several pieces. Let it be the figure shown in fig. 1, and assume that it is all located within the unit square. Choose N random points inside the square. Let F denote the number of points that fall inside S. It is geometrically obvious that the area of S is approximately equal to the ratio F / N. The larger N, the greater the accuracy of this estimate. Two features of the Monte Carlo method. The first feature of the method is the simple structure of the computational algorithm. The second feature of the method is the calculation error, as a rule, proportional to D / N2, where D is some constant, N is the number of tests. This shows that in order to reduce the error by a factor of 10 (in other words, to get one more correct decimal point in the answer), you need to increase N (that is, the amount of work) by a factor of 100. It is clear that it is impossible to achieve high accuracy in this way. Therefore, it is usually said that the Monte Carlo method is especially effective in solving those problems in which the result is needed with a small accuracy (5-10%). The way to use the Monte Carlo method is, in theory, quite simple. To obtain an artificial random sample from a set of quantities described by a certain probability distribution function, one should: 1. Construct a graph or table of the cumulative distribution function based on a series of numbers reflecting the process under study (and not on the basis of a series of random numbers), and the values of the random process variable are plotted along the abscissa axis (x), and the probability values (from 0 to 1) - along the ordinate (y). 2.Using a random number generator, select a random decimal number in the range from 0 to 1 (with the required number of digits). 3. Draw a horizontal line from the point on the ordinate corresponding to the selected random number, to the intersection with the probability distribution curve. 4. From this intersection point the perpendicular to the abscissa axis. b. Repeat steps 2-5 for all required random variables, following the order in which they were written. The general meaning is easy to understand with a simple example: the number of calls to the telephone exchange within 1 minute corresponds to the following distribution: Number of calls Probability Cumulative probability O 0.10 0.10 Suppose we want to conduct a thought experiment for five time periods. Let's build a graph of the cumulative probability distribution. Using the random number generator, we get five numbers, each of which is used to determine the number of calls in a given time interval. Time period Random number Number of calls Taking a few more such samples, we can make sure that if the numbers used are really evenly distributed, then each of the values of the investigated quantity will appear with the same frequency as in the unreal world ", and we will get the results typical for the behavior of the system under investigation. Let's go back to the example. To calculate, we had to select random points in a unit square. How to do it physically? Let's imagine such an experiment. Fig. 1. (on a larger scale) with an S and a square hung on the wall as a target. The shooter, who was at some distance from the wall, shoots N times, aiming at the center of the square. Of course, all the bullets will not land exactly in the center: they will hit N random points on the target. Is it possible to estimate the area S. It is clear that with a high qualification of the shooter, the result of the experiment will be very poor, since almost all the bullets will fall near the center and hit S. It is easy to understand that our method of calculating the area will be valid only when the random points are not just “random”, but also “evenly scattered” over the whole square. In operations research problems, the Monte Carlo method is used in three main roles: 1) when modeling complex, complex operations, where there are many interacting random factors; 2) when checking the applicability of simpler, analytical methods and clarifying the conditions for their applicability; 3) in order to develop amendments to analytical formulas such as "empirical formulas" in technology. 4.